1. Mobile First Algo is Nuanced

It may be inappropriate to generalize what kind of content is best for a mobile first index. First, consider that every search query is different. Here is a sample of a few kinds of queries:

- Long tail queries

- Informational queries (what actor starred in…)

- Local search queries

- Transactional queries

- Research queries

- “How do I” queries

One simply cannot generalize and say that Google prefers short form content because that’s what mobile users prefer. Thinking in terms of what most mobile users might prefer is a great start. But the next step involves thinking about the problem that specific search query is trying to solve and what the best solution for the most users is going to be.

And as you’ll read below, for some queries the most popular answer might vary according to time. Google’s mobile first announcement explicitly stated that for some queries a desktop version might be appropriate.

2. Satisfy The Most Users

Identifying the problem users are trying to solve can lead to multiple answers. If you look at the SERPs you will see there are different kinds of sites. Some might be review sites, some might be informational, some might be educational. Those differences are indications that there are multiple problems users are trying to solve. What’s helpful is that Google is highly likely to order the SERPs according to the most popular user intent, the answer that satisfies the most users.

So if you want to know which kind of answer to give on a page, take a look at the SERPs and let the SERPs guide you. Sometimes this means that most users tend to be on mobile and short form content works best. Sometimes it’s fifty/fifty and most users prefer in-depth content, or more product choices, or fewer product choices.
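One rough way to let the SERPs guide you is to label the top results for a query by hand and count which kind of page appears most often. The sketch below assumes a hypothetical, hand-labeled list of result types (`serp_labels`) and a hypothetical helper (`dominant_intent`). It only illustrates the tallying idea and is not a description of how Google orders results.

```python
from collections import Counter

# Hypothetical, hand-labeled page types for the top ten results of one query.
# These labels are made up for illustration; they are not real SERP data.
serp_labels = [
    "review", "informational", "review", "educational", "review",
    "informational", "review", "transactional", "review", "informational",
]


def dominant_intent(labels):
    """Return the most common label and its share of all labeled results."""
    counts = Counter(labels)
    label, count = counts.most_common(1)[0]
    return label, count / len(labels)


label, share = dominant_intent(serp_labels)
print(f"{label}: {share:.0%} of results")
```

If one type clearly dominates, that is a hint about the answer most users want. An even split suggests the query serves several intents at once.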

Don’t be afraid of the mobile index. It’s not changing much. It simply adds a layer: understanding which kind of content satisfies the typical user (mobile, laptop, desktop, or a combination) and the user intent. It’s just an extra step toward understanding who most users are and then asking how to satisfy them. That’s all.

3. Time Influences Observed User Intent

Every search query demands a specific kind of result because the user intent behind each query is different. Mobile adds an additional layer of intent to search queries. In a Think with Google publication about how people use their devices (PDF), Google stated this:

“The proliferation of devices has changed the way people interact with the world around them. With more touchpoints than ever before, it’s critical that marketers have a full understanding of how people use devices so that they can be here and be useful for their customers in the moments that matter.”

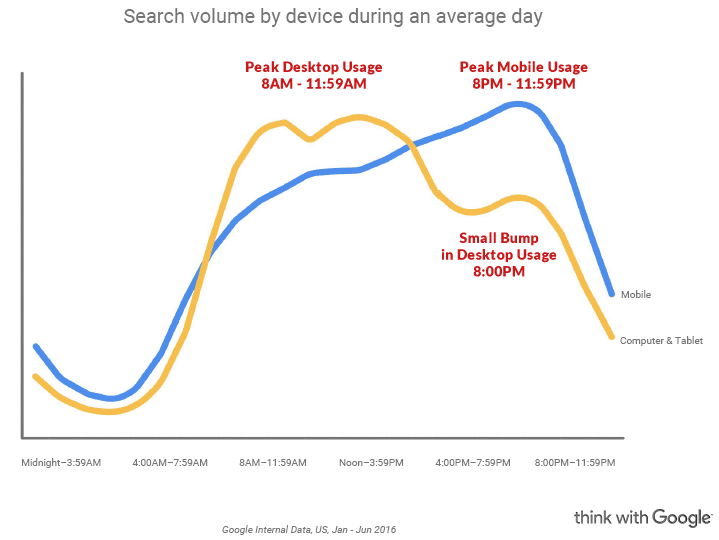

Time plays a role in how the user intent changes. The time of day that a query is made can influence what device that user is using, which in turn says something about that user’s needs in terms of speed, convenience and information. Google’s research from the above cited document states this:

“Mobile leads in the morning, but computers become dominant around 8 a.m. when people might start their workday. Mobile takes the lead again in the late afternoon when people might be on the go, and continues to increase into the evening, spiking around primetime viewing hours.”

This is what I mean when I say that Google’s mobile index is introducing a new layer of what it means to be relevant. It’s not about your on page keywords being relevant to what a user is typing. A new consideration is about how your web page is relevant to someone at a certain time of day on a certain device and how you’re going to solve the most popular information need at that time of day.

Google’s March 2018 official mobile first announcement stated it like this:

“We may show content to users that’s not mobile-friendly or that is slow loading if our many other signals determine it is the most relevant content to show.”

What signals is Google looking at? Obviously, the device itself could be a signal. But also, according to Google, time of day might be a signal because not only does device usage fluctuate during the day but the intent does too.

4. Defining Relevance in a Mobile First Index

Google’s focus on the User Intent 100% changes what the phrase “relevant content” means, especially in a mobile first index. People on different devices search for different things. It’s not that the mobile index itself is changing what is going to be ranked. The user intent for search queries is constantly changing, sometimes in response to Google’s ability to better understand what that intent is.

Some of those core algorithm updates could be changes related to how Google understands what satisfies users. You know how SEOs are worrying about click through data? They are missing an important metric. CTR is not the only measurement tool search engines have.

Do you think CTR 100% tells what’s going on in a mobile first index? How can Google understand if a SERP solved a user’s problem if the user does not even click through?

That’s where a metric similar to Viewport Time comes in. Search engines have been using variations of Viewport Time to understand mobile users. Yet the SEO industry is still wringing its hands about CTR. Ever feel like a piece of the ranking puzzle is missing? This is one of those pieces.

Google’s understanding of what satisfies users is constantly improving. And that impacts the rankings. How we provide the best experience for those queries should change, too.

An important way those solutions have changed involves understanding the demographics of who is using a specific kind of device. What does it mean when someone asks a question on one device versus another device? One answer is that the age group might influence who is asking a certain question on a certain device.

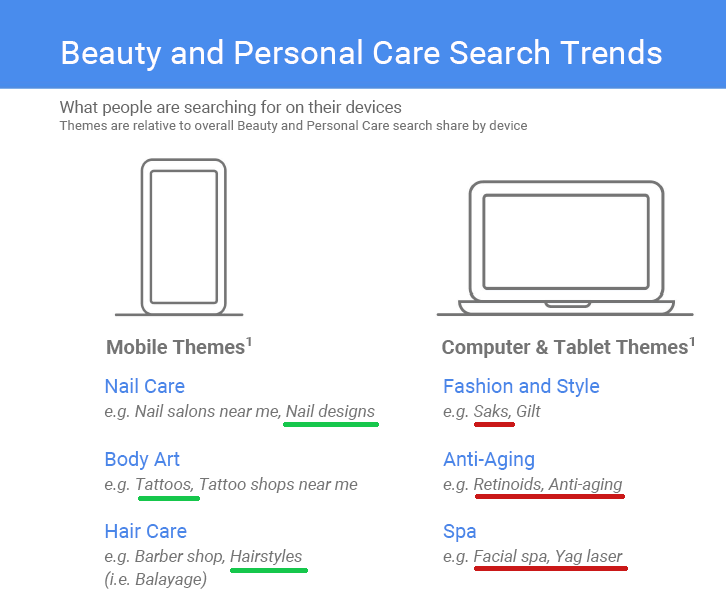

For example, Google shared the following insights about mobile and desktop users (PDF). Searchers in the Beauty and Health niche search for different kinds of things according to device.

Examples of top Beauty & Health queries on mobile devices are for topics related to tattoos and nail salons. Examples of Beauty & Health desktop queries indicate an older user because they’re searching for stores like Saks and beauty products such as anti-aging creams.

It’s naïve to worry about whether you have enough synonyms on your page. That’s not what relevance is about. Relevance is not about keyword synonyms. Relevance is often about problem solving at certain times of day and within specific devices to specific age groups. You can’t solve that by salting your web page with synonyms.

5. Mobile First is not About User Friendliness

An important quality of the mobile first index is convenience when satisfying a user intent. Does the user intent behind the search query demand a quick answer or a shorter answer? Does the web page make it hard to find the answer? Does the page enable comparison between different products?

Now answer those questions by adding the phrase, on mobile, on a tablet, on a desktop and so on.

6. Would a Visitor Understand your Content?

Google can know if a user understands your content. Users vote with their click and viewport time data, and quality raters create another layer of data about certain queries. With enough data Google can predict what a user might find useful. This is where machine learning comes in.

Here’s what Google says about machine learning in the context of User Experience (UX):

“Machine learning is the science of making predictions based on patterns and relationships that’ve been automatically discovered in data.”

If content that is difficult to read is a turn-off, that may be reflected in what sites are ranked and what sites are not. If the topic is complex and a complex answer solves the problem then that might be judged the best answer.

I know we’re talking about Google but it’s useful to understand the state of the art of search in general. Microsoft published a fascinating study about teaching a machine to predict what a user will find interesting. The paper is titled, Predicting Interesting Things in Text. This research focused on understanding what made content interesting and what caused users to keep clicking to another page. In other words, it was about training a machine to understand what satisfies users. Here’s a synopsis:

We propose models of “interestingness”, which aim to predict the level of interest a user has in the various text spans in a document. We obtain naturally occurring interest signals by observing user browsing behavior in clicks from one page to another. We cast the problem of predicting interestingness as a discriminative learning problem over this data.

We train and test our models on millions of real world transitions between Wikipedia documents as observed from web browser session logs. On the task of predicting which spans are of most interest to users, we show significant improvement over various baselines and highlight the value of our latent semantic model.

In general, I find good results with content that can be appreciated by the widest variety of people. This isn’t strictly a mobile first consideration but it is increasingly important in an Internet where people of diverse backgrounds are accessing a site with multiple intents on multiple kinds of devices. Achieving universal popularity becomes increasingly difficult so it may be advantageous to appeal to the broadest array of people in a mobile first index.

7. Google’s Algo Intent Hasn’t Changed

Looked at a certain way, it could be said that Google’s desire to show users what they want to see has remained consistent. What has changed is the users’ age, what they desire, when they desire it and what device they desire it on. So the intent of Google’s algorithm likely remains the same.

The mobile first index can be seen as a logical response to how users have changed. It’s backwards to think of it as Google forcing web publishers to adapt to Google. What’s really happening is that web publishers must adapt to how their users have changed. Ultimately that is the best way to think of the mobile first index: not as a response to what Google wants but as a response to the evolving needs of the user.

In 2017, Google paid nearly $3 million to individuals and researchers as part of their Vulnerability Reward Program (

I attended the SEO Ranking Factors session at this year’s

I attended the SEO Ranking Factors session at this year’s  Any discussion of ranking factors raises a lot of emotions and

controversy, and this was no exception. It is important to understand

how to use information from these types of sessions: None of the data is

fully conclusive, and it should always be taken with a grain of salt.

Any discussion of ranking factors raises a lot of emotions and

controversy, and this was no exception. It is important to understand

how to use information from these types of sessions: None of the data is

fully conclusive, and it should always be taken with a grain of salt. As you can see, the study reports the keyword in the title in only 35 percent of the pages they studied.

As you can see, the study reports the keyword in the title in only 35 percent of the pages they studied. The big point that emerges from this slide is how infrequently

keyword phrases are being used in content that ranks well, even for

high-volume terms. There is some great good news in this: It suggests

link spamming on the web is down. As an industry, we should celebrate

that. It also suggests ranking highly can be done without getting a lot

of rich anchor text links to your page.

The big point that emerges from this slide is how infrequently

keyword phrases are being used in content that ranks well, even for

high-volume terms. There is some great good news in this: It suggests

link spamming on the web is down. As an industry, we should celebrate

that. It also suggests ranking highly can be done without getting a lot

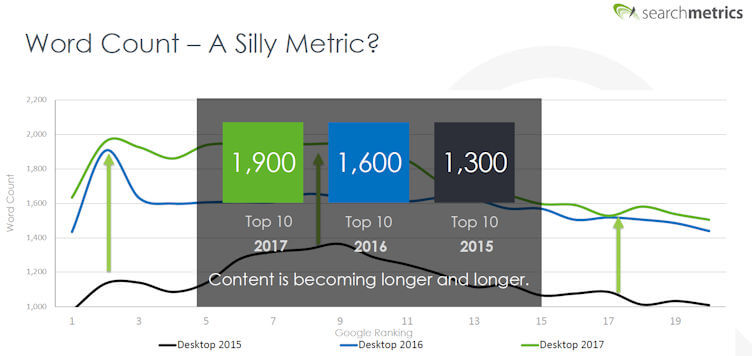

of rich anchor text links to your page. In spite of all the discussion around making content shorter for

mobile, this data suggests longer content length helps with ranking. The

study shows the top three ranking positions host longer content.

In spite of all the discussion around making content shorter for

mobile, this data suggests longer content length helps with ranking. The

study shows the top three ranking positions host longer content.

The data supports the notion links still matter a great deal in terms of determining where a webpage ranks.

The data supports the notion links still matter a great deal in terms of determining where a webpage ranks. During much of his presentation, Marcus reviewed how different market

sectors vary. The sectors he looked at were dating, wine, recipes,

furniture, car tuning and divorce.

During much of his presentation, Marcus reviewed how different market

sectors vary. The sectors he looked at were dating, wine, recipes,

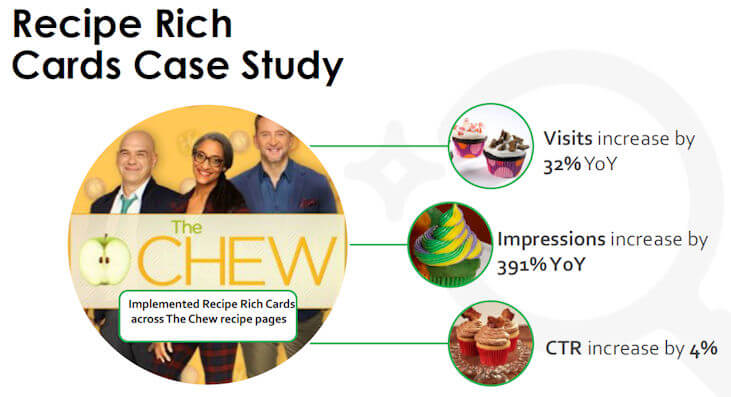

furniture, car tuning and divorce. The recipes segment has the highest usage of microdata by far. That

is not surprising since Google lets you mark up recipe content with

structured data, which, in turn, provides rich results and increased

search visibility.

The recipes segment has the highest usage of microdata by far. That

is not surprising since Google lets you mark up recipe content with

structured data, which, in turn, provides rich results and increased

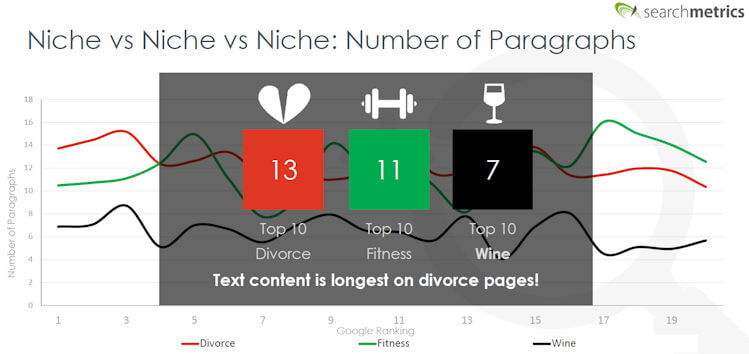

search visibility. I really like this point because it shows how you need to tailor your content based on the market you’re in.

I really like this point because it shows how you need to tailor your content based on the market you’re in. Notice how content on divorce sites tends to be longer, while fitness

is far behind. This shows that the need for longer content varies by

market.

Notice how content on divorce sites tends to be longer, while fitness

is far behind. This shows that the need for longer content varies by

market. See how the furniture market is the clear leader here, which makes

sense. When you’re selling furniture, you need to create a visual

experience with lots of choices.

See how the furniture market is the clear leader here, which makes

sense. When you’re selling furniture, you need to create a visual

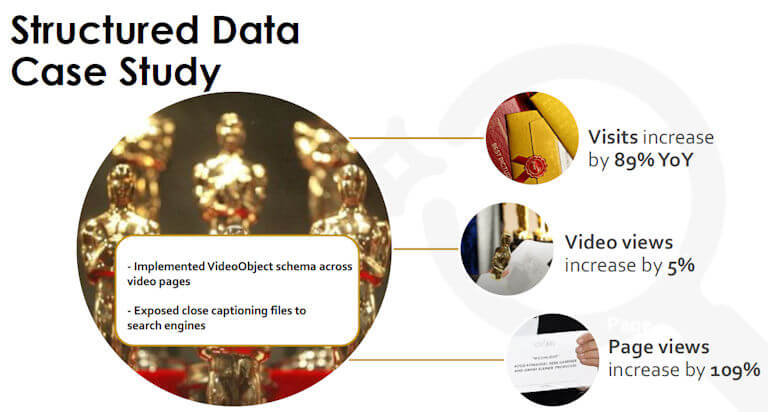

experience with lots of choices. The scale of growth of visits and page views is impressive! This is

also consistent with what I have seen across other schema.org tests that

I’ve participated in.

The scale of growth of visits and page views is impressive! This is

also consistent with what I have seen across other schema.org tests that

I’ve participated in. I am a strong proponent of the value of featured snippets. Chanelle

shared some great data from what they saw when they obtained a featured

snippet for the phrase “Beyoncé guacamole recipe”:

I am a strong proponent of the value of featured snippets. Chanelle

shared some great data from what they saw when they obtained a featured

snippet for the phrase “Beyoncé guacamole recipe”: Now, that’s a serious uptick in traffic!

Now, that’s a serious uptick in traffic! Last, but not least ABC ran tests to compare the performance of

non-AMP pages with accelerated mobile pages (AMP). Here are the user

engagement results they saw from these tests:

Last, but not least ABC ran tests to compare the performance of

non-AMP pages with accelerated mobile pages (AMP). Here are the user

engagement results they saw from these tests: Here are the improvements in traffic:

Here are the improvements in traffic: Bear in mind that AMP is not a ranking factor, but as ABC is a news

site, participation in AMP made them eligible for the AMP news carousel

on smartphone devices.

Bear in mind that AMP is not a ranking factor, but as ABC is a news

site, participation in AMP made them eligible for the AMP news carousel

on smartphone devices.